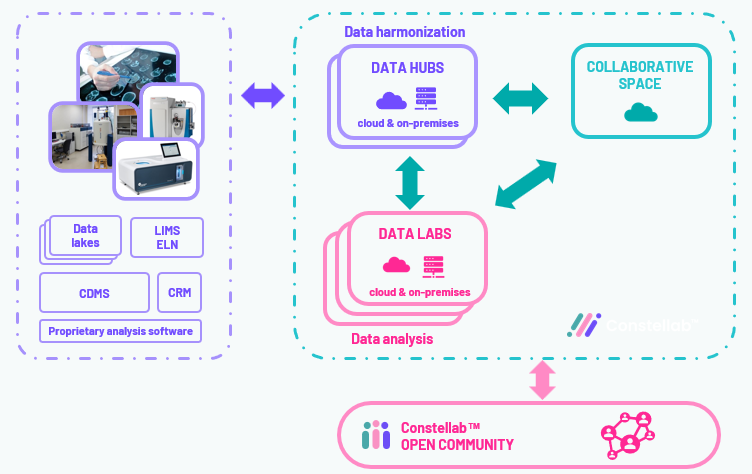

This document describes the overall technical architecture of Constellab.

Collaborative space

Here is the architecture of a collaborative space.

Collaborative space

The collaborative space is hosted in the cloud by Constellab. A collaborative space can have as many data hubs and data labs as you want. It contains all the project information and receives reports and experiments from the different data labs and data hubs. The experiments, reports and documents of the collaborative space can be stored in the cloud (S3 storage) or in a data hub.
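To make these relationships concrete, here is a minimal Python sketch of a collaborative space that holds any number of labs and chooses where to store its content. All names (`CollaborativeSpace`, `Lab`, `StorageBackend`, `storage_for`) are hypothetical and are not part of any Constellab API. The rule "store in a data hub when one is connected, otherwise in cloud S3" is an assumption made only for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum


class StorageBackend(Enum):
    """Where an experiment, report or document of the space is stored."""
    CLOUD_S3 = "s3"
    DATA_HUB = "data_hub"


@dataclass
class Lab:
    name: str
    is_data_hub: bool = False


@dataclass
class CollaborativeSpace:
    name: str
    labs: list[Lab] = field(default_factory=list)

    def add_lab(self, lab: Lab) -> None:
        # A space can hold any number of data labs and data hubs.
        self.labs.append(lab)

    def data_hubs(self) -> list[Lab]:
        return [lab for lab in self.labs if lab.is_data_hub]

    def storage_for(self, prefer_hub: bool = True) -> StorageBackend:
        # Assumed rule: use a data hub when one is connected and preferred,
        # otherwise fall back to cloud S3 storage.
        if prefer_hub and self.data_hubs():
            return StorageBackend.DATA_HUB
        return StorageBackend.CLOUD_S3


space = CollaborativeSpace("project-x")
space.add_lab(Lab("analysis-lab"))
print(space.storage_for())  # StorageBackend.CLOUD_S3 (no data hub yet)
space.add_lab(Lab("company-hub", is_data_hub=True))
print(space.storage_for())  # StorageBackend.DATA_HUB
```

Keeping the storage choice in one method means the rest of the model does not need to know whether content ends up in S3 or in a data hub.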

Data hub

The data hub is a special lab that is highly connected to the ecosystem. Its role is to harmonize and store data from any source (raw data, analyzed data, project documents, etc.).

It connects to other labs, to the collaborative space and to external data sources (S3 storage, equipment). It can be managed in the cloud or on-premises. It is meant to be always accessible, as it stores the company's data.

Data lab

A data lab is a lab used to run data analyses and write reports. Once an analysis is finished and its report written, the results can be transferred to the data hub for long-term storage, and the report can be transferred to the collaborative space so that it remains continuously accessible.
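The hand-off described above can be sketched as a small routing function. This is a hypothetical illustration, not Constellab code: the `AnalysisOutput` type, the `"result"`/`"report"` labels and the destination strings are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class AnalysisOutput:
    name: str
    kind: str  # hypothetical labels: "result" or "report"


def route_output(output: AnalysisOutput) -> str:
    """Return the destination of a finished analysis output.

    Assumption: results go to the data hub for long-term storage, and
    reports go to the collaborative space so they stay accessible.
    """
    if output.kind == "result":
        return "data_hub"
    if output.kind == "report":
        return "collaborative_space"
    raise ValueError(f"unknown output kind: {output.kind!r}")


outputs = [AnalysisOutput("growth_curves.csv", "result"),
           AnalysisOutput("weekly_summary", "report")]
for out in outputs:
    print(out.name, "->", route_output(out))
```

Rejecting unknown kinds with an explicit error avoids silently sending data to the wrong place.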

A data lab can be managed in the cloud or on-premises. Cloud labs can be started and stopped on demand to control cloud usage.
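The following sketch illustrates why starting and stopping cloud labs on demand helps control usage: only the time a lab is actually running is counted. The `CloudLab` class and its accounting are hypothetical and say nothing about how Constellab measures or bills cloud usage. The clock is injectable so the example can be run deterministically.

```python
import time


class CloudLab:
    """Hypothetical cloud data lab that can be started and stopped on demand."""

    def __init__(self, name, clock=time.monotonic):
        self.name = name
        self._clock = clock
        self._started_at = None
        self.running_seconds = 0.0  # accumulated running time only

    @property
    def is_running(self):
        return self._started_at is not None

    def start(self):
        # Starting an already running lab has no effect.
        if not self.is_running:
            self._started_at = self._clock()

    def stop(self):
        # Add the elapsed running time, then mark the lab as stopped.
        if self.is_running:
            self.running_seconds += self._clock() - self._started_at
            self._started_at = None


# Fake clock: start at 0 s, stop at 3600 s, start at 7200 s, stop at 9000 s.
ticks = iter([0.0, 3600.0, 7200.0, 9000.0])
lab = CloudLab("analysis-lab", clock=lambda: next(ticks))
lab.start()
lab.stop()
lab.start()
lab.stop()
print(lab.running_seconds)  # 5400.0
```

The hour between the two sessions is not counted, which is the point of stopping a lab that is not in use.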

External source

Constellab can interact with external sources through the data hubs and data labs. It can retrieve data from, or store data in, an external data lake, an S3 service, an FTP server, etc. It can also connect to equipment to extract raw data from it, or to manage it if the equipment allows it.
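One way to picture how a data hub or data lab decides how to reach an external source is to dispatch on the scheme of its location URL. This is a minimal sketch under that assumption; the `CONNECTORS` mapping and the connector names are hypothetical and do not describe Constellab's actual integrations.

```python
from urllib.parse import urlparse

# Hypothetical mapping from URL scheme to the kind of connector needed.
CONNECTORS = {
    "s3": "S3 connector",
    "ftp": "FTP connector",
    "https": "HTTP/data-lake connector",
}


def connector_for(location: str) -> str:
    """Return the connector able to read from or write to `location`."""
    scheme = urlparse(location).scheme.lower()
    try:
        return CONNECTORS[scheme]
    except KeyError:
        raise ValueError(f"no connector for scheme {scheme!r}") from None


print(connector_for("s3://my-bucket/raw/run1.csv"))  # S3 connector
print(connector_for("ftp://instrument.local/export.txt"))  # FTP connector
```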

Constellab Community

The collaborative space, the data hubs and the data labs are highly connected to Constellab Community. With Constellab Community you can access bricks and live tasks that are stored publicly, or privately for your collaborative space.
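The public/private visibility rule described above can be sketched as a single access check. The `CommunityItem` type, its fields and `can_access` are hypothetical names chosen for illustration; they are not the Constellab Community API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CommunityItem:
    name: str
    kind: str                          # "brick" or "live_task"
    is_public: bool
    owner_space: Optional[str] = None  # only set for private items


def can_access(item: CommunityItem, space: str) -> bool:
    # Public items are visible to every collaborative space; private items
    # only to the collaborative space they were stored for.
    return item.is_public or item.owner_space == space


public_brick = CommunityItem("plotting", "brick", is_public=True)
private_task = CommunityItem("qc-check", "live_task", is_public=False,
                             owner_space="project-x")
print(can_access(private_task, "project-x"))  # True
print(can_access(private_task, "project-y"))  # False
```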

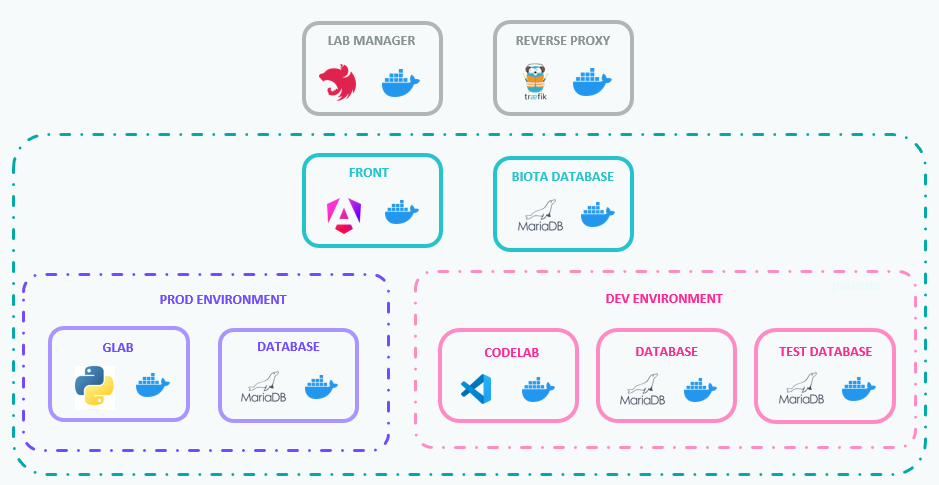

Data Lab / Data hub architecture

The data lab and data hub rely on Docker so that they are platform-agnostic and easy to deploy in any cloud or on-premises. From now on, I will only refer to the data lab, but everything also applies to the data hub. Each data lab or data hub is autonomous and can be used on its own. However, it is within the Constellab ecosystem that the data lab and data hub reach their full potential.

Here is the architecture of the Docker containers running inside a data lab.

Lab manager

The lab manager is a service running inside the data lab that manages it. It is responsible for all the containers in the green square (all of them except the reverse proxy). The lab manager has several roles:

- install, start and stop the containers of the data lab

- configure the bricks of the data lab

- back up the data lab

- send information about the data lab to the collaborative space

The lab manager and the reverse proxy are the first containers to start on the server.
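The start-up constraints described above (reverse proxy and lab manager first, the other containers managed by the lab manager, the Biota database only when the biota brick is configured) can be expressed as a dependency graph and ordered with Python's standard `graphlib.TopologicalSorter`. The container names are hypothetical, and the assumption that every managed container simply depends on the lab manager is a simplification for illustration.

```python
from graphlib import TopologicalSorter

# Hypothetical names for the containers managed by the lab manager.
MANAGED_CONTAINERS = ["front", "prod-glab", "prod-db", "codelab", "dev-db", "test-db"]


def startup_order(configured_bricks: set[str]) -> list[str]:
    """Return one valid start order for the containers of a data lab."""
    # Each key starts after every container in its set of predecessors.
    graph = {"reverse-proxy": set(), "lab-manager": set()}
    for name in MANAGED_CONTAINERS:
        graph[name] = {"lab-manager"}  # started by the lab manager
    if "biota" in configured_bricks:
        # The Biota database container exists only with the biota brick.
        graph["biota-db"] = {"lab-manager"}
    return list(TopologicalSorter(graph).static_order())


print(startup_order({"biota"})[:2])  # reverse-proxy and lab-manager, in some order
```

`static_order()` yields all containers without predecessors first, so the reverse proxy and the lab manager always come before the others; their relative order is not fixed.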

Reverse proxy

The reverse proxy (based on Traefik) is responsible for routing HTTPS requests for each subdomain to the correct container on the data lab server. A wildcard DNS record (like *.lab.constellab.app) must point to the data lab server so that the reverse proxy can expose subdomains (like app.lab.constellab.app).
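The routing decision the reverse proxy makes can be sketched as a lookup from the request's host name to a container. This is not Traefik configuration (Traefik defines routers with rules such as `Host(...)`); it only illustrates the idea. The `ROUTES` mapping and container names are hypothetical.

```python
from typing import Optional

# Every subdomain of BASE_DOMAIN resolves to the data lab server through
# the wildcard DNS record; the proxy then picks the container.
BASE_DOMAIN = "lab.constellab.app"
ROUTES = {
    "app": "front",         # hypothetical subdomain-to-container mapping
    "codelab": "codelab",
}


def route(host: str) -> Optional[str]:
    """Return the container that should receive a request for `host`."""
    host = host.lower().split(":", 1)[0]  # drop an optional port
    suffix = "." + BASE_DOMAIN
    if not host.endswith(suffix):
        return None  # not a subdomain of the data lab domain
    subdomain = host[: -len(suffix)]
    return ROUTES.get(subdomain)  # None for unknown subdomains


print(route("app.lab.constellab.app"))  # front
```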

Front

The front container serves the web interface for both the prod and dev environments.

Biota database

This is a special container added to the data lab only when the Biota brick is configured. In that case, the container is started with the complete Biota database (~10 GB) and is accessible in read-only mode from both the prod and dev environments.

Prod environment

This is the default environment used when you log into your data lab. It is completely separated from the dev environment and has its own glab service and database.

The glab service is the Python server that runs all data analyses and handles interactions with the web interface. Bricks are installed inside the glab service.

Dev environment

The dev environment contains the Codelab and two databases: one for the dev environment and one for running tests on a brick. This environment is completely separated from the prod environment.
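The database separation between environments can be sketched as a lookup keyed by environment and mode. Host names, database names and the `database_for` helper are hypothetical; the sketch only encodes what the text states: prod has its own database, while dev has one database for development and one for brick tests.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DatabaseConfig:
    host: str
    name: str


# Hypothetical hosts and names; no database is shared between environments.
DATABASES = {
    ("prod", "run"): DatabaseConfig("prod-db", "lab_prod"),
    ("dev", "run"): DatabaseConfig("dev-db", "lab_dev"),
    ("dev", "test"): DatabaseConfig("test-db", "lab_test"),
}


def database_for(environment: str, mode: str = "run") -> DatabaseConfig:
    """Return the database used by `environment` in the given `mode`."""
    try:
        return DATABASES[(environment, mode)]
    except KeyError:
        raise ValueError(f"no database for {environment!r} in {mode!r} mode") from None


print(database_for("dev", "test"))  # DatabaseConfig(host='test-db', name='lab_test')
```

Brick tests only map to the dev test database, so running them cannot touch prod data.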

The Codelab is a fully integrated, pre-configured VS Code available online. With this VS Code you can develop bricks, run Jupyter notebooks, analyze data, and more. In the Codelab you can also start the dev environment, which lets you use the web interface and run analyses in an environment separated from production.